How to design human-centric products in the era of AI-Powered Software

Businesses are transforming, with some creating AI-powered products and others using AI to stay competitive. For businesses that are dedicated to creating AI-powered products, the era of AI opens a realm of endless possibilities. These companies embark on a thrilling journey where AI becomes the beating heart of their creations.

On the other hand, there are businesses that have recognized that the transformative power of AI can unlock new levels of efficiency, accuracy, and innovation. AI integration improves product functionality, decision-making processes, and user experiences.

As the floodgates opened, a world of possibilities emerged with the availability of APIs, libraries, and other AI tools. It seemed like everyone wanted a piece of the action. The result? A Wild West of AI-powered apps that sprouted like wildflowers. For example, there are now over 500 new products utilizing the groundbreaking GPT-4 technology, with that number steadily climbing.

However, in this vast and rapidly expanding AI landscape, a crucial differentiator begins to take center stage—the user experience. With so many AI-powered apps vying for attention, the effectiveness of the user experience will ultimately set them apart. While the underlying technology may be similar across various products, it is how these technologies are harnessed, curated, and tailored to meet user needs that will determine success.

Alongside the promises of AI, businesses must navigate ethical considerations and ensure the responsible use of this powerful technology. Transparency, fairness, and user privacy must be paramount in the design and implementation of AI-powered products or the integration of AI into existing software. Many argue that machine learning is pretty much a UX problem, and that is a statement we can stand behind.

So how can we craft AI-powered apps that not only dazzle with their capabilities but also provide meaningful value and a delightful experience?

Understanding Human-Centric Design for AI Software

Human-centric design is an approach that puts people at the heart of the development process. It studies human needs, behaviors, and emotions to create AI solutions that meet them. By incorporating human-centric design principles, AI products can deliver more effective, engaging, and user-friendly experiences that resonate with the end-users.

The Evolution of AI and Its Impact on UX

AI has grown in complexity with the use of natural language processing, machine learning, and deep learning. As AI continues to advance, it's crucial to ensure that user experience (UX) remains a top priority. By considering how UX and AI shape each other, developers can create AI products that are more accessible, enjoyable, and valuable to users.

When it comes to the user experience of AI, it is crucial to prioritize human decision-making. An AI should serve as a tool that considers personal context, offering users multiple reference points and calibration options. Rather than solely finding the needle in the haystack, the role of AI should be to clear the haystack, enabling users to easily spot the needle on their own. The goal is to empower users by showing them the capabilities of AI and enhancing their own ability to make informed decisions.

Crafting human-centric AI experiences

1. Start with the User and Context

Machine learning alone cannot determine the problems to solve or address unstated needs.

We still need to do all that hard work to find human needs. This involves engaging in activities like contextual inquiries, interviews, surveys, and analyzing customer support tickets and logs.

When developing AI products, it's important to begin with the problem. This means putting the user at the center of the development process and using empathy as a core value.

- Where do user needs intersect with the strengths of AI?

Gather input from a wide range of users during the early stages of product development.

By incorporating diverse perspectives, you can ensure that you don't overlook significant market opportunities and prevent unintentional exclusion of specific user groups in your designs.

- How can I identify new opportunities for AI?

One effective approach to identifying opportunities for AI to enhance the user experience is by mapping the current workflow of a task.

By observing how people currently complete a process, you can gain insights into the necessary steps involved and identify areas that could benefit from automation or augmentation.

If you already have an AI-powered product, it's important to validate your assumptions through user research. Consider conducting tests where users can interact with your product, either through actual automation or a simulated version (such as a "Wizard of Oz" test), to gauge their reactions and gather feedback on the results.

- Does AI add value? Can it solve this problem in a unique way?

Once you've identified the area you want to improve, evaluate which solutions can benefit from AI, which can be enhanced by AI, and which may gain no advantage from AI or may even be made worse by it.

Question whether integrating AI into your product will genuinely enhance it. In many cases, a rule-based solution can be just as effective, if not more so, than an AI-based solution.

Opting for a simpler approach offers the advantages of easier development, explanation, debugging, and maintenance. Take the time to critically assess how introducing AI to your product may improve or potentially hinder the user experience.

2. Set the right expectations

Clearly communicating the capabilities and limitations of AI software is crucial to managing user expectations. Over-promising and under-delivering can lead to frustration and disappointment, whereas being transparent and honest about what the AI can and cannot do will help to build trust and confidence in the system.

Set clear expectations by communicating the product's capabilities and limitations early on. Instead of focusing on the technology, emphasize the benefits for the users.

Since AI systems operate on probabilities, there may be instances where the system produces incorrect or unexpected results. Hence, it becomes crucial to assist users in managing their expectations regarding the functionality and output of the system. This can be achieved by being open and transparent about what the system can and cannot do.

- Confidence indicators

By using terms like "suggested," "hint," or presenting confidence scores, you can effectively communicate the level of assurance users should place in the recommendations. This approach helps manage expectations, ensuring that users are not excessively disappointed if the suggestions turn out to be inaccurate.
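One way to put this into practice is to translate a raw model score into wording the user can act on. The sketch below is illustrative only: the function names `confidence_label` and `format_suggestion`, the thresholds (0.85 and 0.5), and the label texts are assumptions rather than values from any particular product, and they would need to be tuned against real user research.

```python
def confidence_label(score: float) -> str:
    """Map a model confidence score in [0, 1] to hedged UI wording.

    The thresholds are hypothetical and should be calibrated per product.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= 0.85:
        return "Suggested"
    if score >= 0.5:
        return "Possible match"
    return "Hint (low confidence)"


def format_suggestion(text: str, score: float) -> str:
    """Prefix a suggestion with its label and a rounded percentage."""
    return f"{confidence_label(score)}: {text} ({round(score * 100)}% confidence)"


print(format_suggestion("Archive this email", 0.75))
```

Keeping the wording in one place makes it easier to test different labels with users without touching the model itself.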

- Clear Communication

Clearly convey the capabilities and limitations of the AI system to users. Provide accurate and transparent information about what the AI can and cannot do. It's important to address any misconceptions users may have about AI, whether it's excessive trust or lack thereof.

- Avoid the personality trap

Make sure that the AI integrates seamlessly with your product without forcing a human-like personality into its features. Adding artificial wit and charm may only cause confusion among users and create unrealistic expectations. Stay true to your existing brand values to maintain consistency and user trust.

- Ownership of Mistakes

When the AI software makes errors or fails to meet user expectations, it's important to take responsibility for those mistakes. Instead of blaming the user, the software should acknowledge its limitations and shortcomings.

For example, using phrases like “I’m sorry, but the system seems to be having difficulty” lets the user know the system realizes that it is to blame, not them.

3. Make AI less of a black box

AI systems should offer understandable explanations for their decisions and actions. This can be achieved through user-friendly interfaces, tooltips, or informative messages that provide insights into how the AI arrived at a specific result.

Since AI/ML models often work as black boxes, it can be challenging to explain their results. Despite the large amounts of data fed into them, their inner workings remain mysterious. Furthermore, interaction effects between features can complicate explanations, as they reveal relationships that go beyond the individual impact of each feature. As a result, explaining the complete behavior of such models becomes difficult or even impossible.

- Optimize for explainability

Develop tools or features that allow users to explore and interpret the AI's decision-making process. This could include displaying relevant factors, highlighting influential features, or offering alternative scenarios that help users understand the system's behavior better. You can also leverage other in-product flows, such as onboarding, to explain AI systems.

- Use understandable language

Avoid using complex technical jargon when communicating with users. Use plain language and simple explanations that can be easily understood by a broad audience, regardless of their technical expertise.

- Present explanations relative to user action

When users take actions and receive immediate responses from the AI system, it becomes easier for them to understand cause and effect. Providing explanations in such moments helps establish or regain user trust. Similarly, when the system is functioning well, offering guidance on how users can support its reliability reinforces their confidence.

- Simplifying and displaying relevant results to aid decision-making

Prioritize important information by identifying the key factors that influence the decision-making process and emphasizing them in the displayed results. This helps users focus on the most critical aspects. You can also consider intelligent filtering and sorting mechanisms to present the most relevant information first. This reduces cognitive overload and allows users to quickly access the data they need to make informed decisions.
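As a rough illustration of surfacing only the most influential factors, the sketch below ranks factors by the magnitude of their contribution and keeps the top few. The `Factor` type, the `top_factors` helper, and the sample contribution values are all hypothetical; how contributions are actually computed depends on the model and the explanation method in use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Factor:
    name: str
    contribution: float  # signed influence on the result (made-up units)


def top_factors(factors: list[Factor], limit: int = 3) -> list[Factor]:
    """Return the `limit` factors with the largest absolute contribution."""
    ranked = sorted(factors, key=lambda f: abs(f.contribution), reverse=True)
    return ranked[:limit]


# Made-up example: factors behind a commute-time estimate.
factors = [
    Factor("Traffic on usual route", 0.42),
    Factor("Day of week", 0.05),
    Factor("Road closure nearby", -0.31),
    Factor("Weather", 0.12),
]

for factor in top_factors(factors, limit=2):
    print(factor.name)
```

Ranking by absolute value keeps strong negative influences visible, since a factor that pushes a result down can matter to the user as much as one that pushes it up.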

- Communicate Data sources when possible

Building trust can be achieved by transparently informing the user about the types of data that feed into the results. By doing so, users can recognize that the decisions are grounded in variables they would consider themselves when making similar choices. Letting users know which data the model uses helps them recognize when they have important information that the system may not account for. This prevents users from relying too much on the system in certain situations.

- Communicate the privacy and security settings

Clearly communicate the privacy and security settings related to user data. Provide explicit information on what data is shared and what data remains private.

If you were presented with both results, which one would you be more inclined to understand and trust?

4. Give users control and freedom

Maintaining a balance between automation and user control is crucial for human-centric AI systems. Users should always feel that they have the final say in decision-making and can override the AI's suggestions if necessary. By keeping users in control, developers can create AI products that empower and enhance human capabilities without diminishing the importance of human input.

- User Feedback and Correction

Enable users to provide feedback on the AI system's outputs and allow them to correct or modify the AI-generated results. This feedback loop not only improves the accuracy and relevance of the AI software but also gives users a sense of control and the opportunity to fine-tune the system's behavior.

- Recognize user preferences for control

When designing AI-powered products, it's tempting to automate tasks that people currently do manually. For example, a music app might generate themed song collections to save users time and effort. However, it's important to understand that users may have preferences regarding automation and control, regardless of whether AI is involved.

People might prefer to maintain control when they enjoy a specific task, when the stakes of the situation are high, or when they want to take ownership and see their vision come to life. On the other hand, users might be willing to let go of control when the task is mundane, unenjoyable, or beyond their capabilities.

- Opt-In and Opt-Out Mechanisms

Give users the ability to opt in or opt out of specific AI features or functionalities. For example, allow users to choose whether they want personalized recommendations, targeted advertisements, or AI-powered automation. This respects user preferences and ensures they have the freedom to engage with the AI software according to their comfort level.
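A minimal sketch of such a mechanism is shown below. The class name `AIFeaturePreferences` and the feature keys are hypothetical, and defaulting every feature to off (so that nothing runs until the user opts in) is an assumption of this example rather than a rule from the text.

```python
from dataclasses import dataclass, field


def _default_features() -> dict[str, bool]:
    # Hypothetical feature names; everything starts disabled.
    return {
        "personalized_recommendations": False,
        "targeted_ads": False,
        "ai_automation": False,
    }


@dataclass
class AIFeaturePreferences:
    """Per-user switches for optional AI features."""
    enabled: dict[str, bool] = field(default_factory=_default_features)

    def opt_in(self, feature: str) -> None:
        self._require_known(feature)
        self.enabled[feature] = True

    def opt_out(self, feature: str) -> None:
        self._require_known(feature)
        self.enabled[feature] = False

    def is_enabled(self, feature: str) -> bool:
        # Unknown features are treated as disabled rather than raising.
        return self.enabled.get(feature, False)

    def _require_known(self, feature: str) -> None:
        if feature not in self.enabled:
            raise KeyError(f"Unknown AI feature: {feature}")


prefs = AIFeaturePreferences()
prefs.opt_in("personalized_recommendations")
print(prefs.is_enabled("personalized_recommendations"))
print(prefs.is_enabled("targeted_ads"))
```

Checking `is_enabled` at the point where a feature would run keeps the user's choice authoritative, whatever the rest of the system decides.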

5. Graceful handling of errors & failures

As users engage with your product, they will explore it in ways that you may not have anticipated during the development phase. Misunderstandings, false starts, and mistakes are bound to occur, making it essential to design for these scenarios as an integral part of a user-centered product.

If you’re a glass-half-full type of person, errors can in fact be seen as valuable opportunities. They facilitate rapid learning through experimentation, aid in developing accurate mental models, and encourage users to provide feedback, thereby contributing to product improvement.

- Understand who made the error

A little earlier in the article I mentioned “the ownership of mistakes”, and there’s a bit more to it. In the context of AI products, three types of errors can occur: user, system, and context errors. Before designing error responses, identify the errors that users can already perceive or that you can predict, and then categorize them as follows:

System Limitation: The system is inherently unable to provide a correct answer, or any answer at all, because of its limitations.

Context: The user perceives an error because the system's actions are unexplained, do not match the user's mental model, or rest on flawed assumptions.

Background Errors: Neither the system nor the user is aware that the system is functioning incorrectly.

- Offer ways to move forward after a failure

Offer actionable suggestions or alternative paths that users can take to resolve or mitigate the issue. Provide guidance on how they can proceed or recover from the failure. Additionally, seek to collect feedback from users about their experiences with failures and use that feedback to enhance the AI system. The goal is to continuously update and refine the system to minimize future failures and improve user satisfaction.

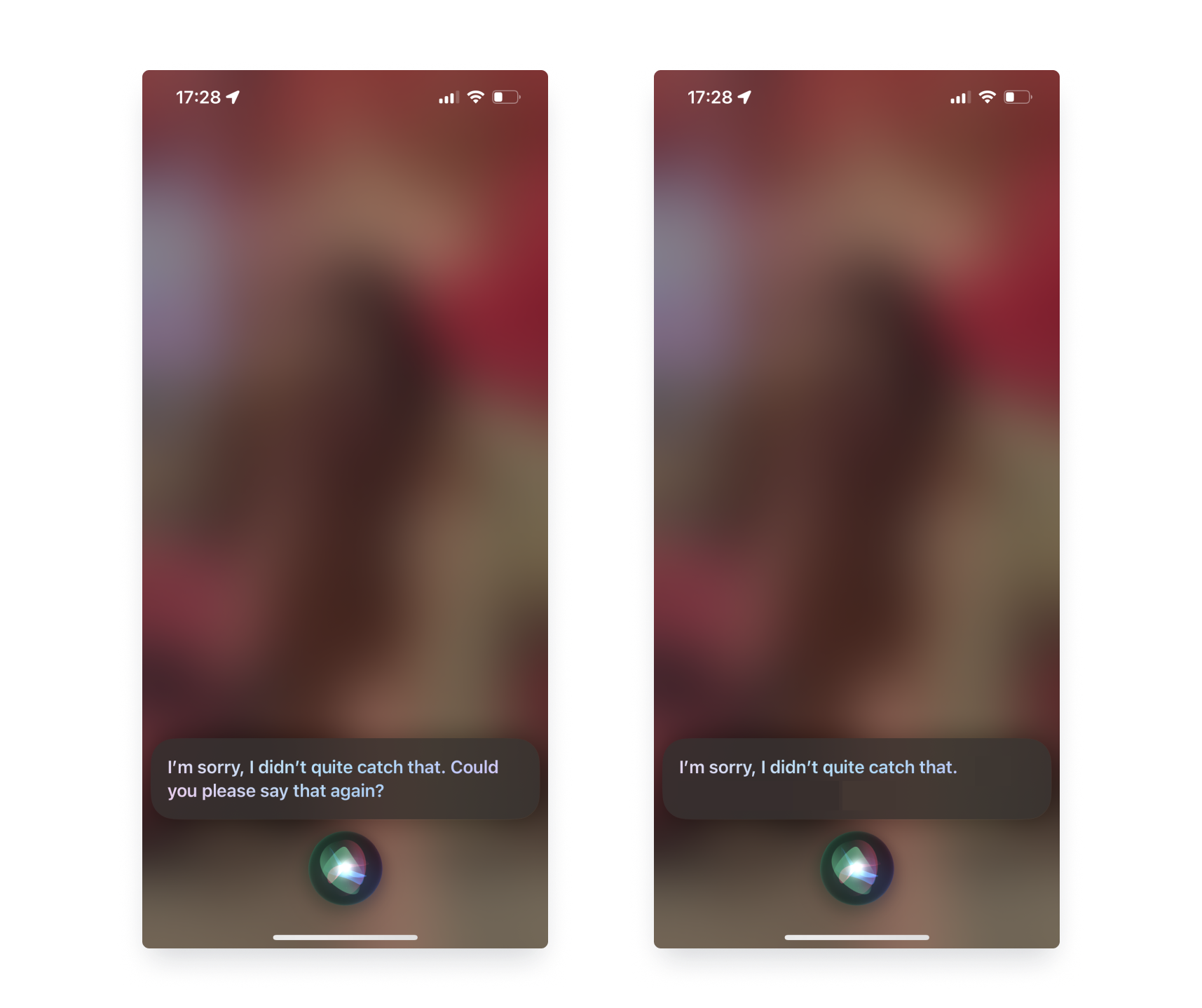

What if Siri didn’t offer a “fallback” after failing to understand the user’s input? Users would be left without any guidance or assistance, leading to confusion and difficulty in achieving their desired tasks.
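Pulling together the error categories and the fallback idea from this section, the sketch below pairs each category with a message that owns the failure and a short list of ways to move forward. The names `ErrorCategory`, `ErrorResponse`, and `build_error_response`, as well as the message and next-step texts, are hypothetical and only meant to show the shape such a mapping could take.

```python
from dataclasses import dataclass
from enum import Enum


class ErrorCategory(Enum):
    SYSTEM_LIMITATION = "system_limitation"
    CONTEXT = "context"
    BACKGROUND = "background"


@dataclass(frozen=True)
class ErrorResponse:
    message: str
    next_steps: tuple[str, ...]


def build_error_response(category: ErrorCategory) -> ErrorResponse:
    """Return a message that owns the failure plus ways to move forward."""
    if category is ErrorCategory.SYSTEM_LIMITATION:
        return ErrorResponse(
            "Sorry, this is something the system can't do yet.",
            ("Try a simpler request", "Complete the task manually"),
        )
    if category is ErrorCategory.CONTEXT:
        return ErrorResponse(
            "Sorry, the system may have misread what you wanted.",
            ("See why this result was shown", "Rephrase your request"),
        )
    # Background errors are invisible to the user by definition, so this
    # branch assumes monitoring has already surfaced the problem.
    return ErrorResponse(
        "Sorry, the system found a problem with earlier results.",
        ("Review recent results", "Send feedback"),
    )


response = build_error_response(ErrorCategory.CONTEXT)
print(response.message)
for step in response.next_steps:
    print("-", step)
```

Every branch returns at least one next step, so the user is never left at a dead end, and none of the messages place the blame on the user.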